Introduction

Lately, foundation models, and Large Language Models (LLMs) in particular, have amazed the world with their ability to generate human-like text from a carefully crafted prompt. You may have heard the news about OpenAI’s ChatGPT or Google’s Bard helping students write essays, but what exactly are these systems?

These systems are also referred to as Generative AI since they can generate text, images (DALL-E), or audio by using the patterns learned from vast amounts of data.

LLMs in particular are trained on large corpora of natural-language text.

ChatGPT is a web interface for interacting with models from the GPT-n series developed by OpenAI. GPT stands for Generative Pre-trained Transformer. OpenAI introduced the concept in 2018; these models are artificial neural networks built on the transformer architecture.

The latest release at the time of writing is GPT-4, published on March 14, 2023. It brought a higher word limit, better reasoning and understanding, and multimodal capabilities.

Besides the web interface, which allows humans to interact with the AI models, OpenAI offers an API with multiple endpoints. Each endpoint supports several models; you specify the model you want in the request and format the request as described in the documentation.

Here, we’ll look at some of the models you can use, options for working around some of the limits the API imposes on requests, and ways to connect your own data.

ChatGPT vs API

ChatGPT has a simple conversational text interface for human interaction and retains context from prior exchanges. You can also interact with the models via OpenAI’s API. In that case, every request is a fresh start and no information about previous interactions is saved, among other constraints.
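Because the API keeps no memory between requests, a client that wants a multi-turn conversation has to resend the earlier messages itself. The following is a minimal sketch of that bookkeeping. The `Conversation` class and its method names are hypothetical helpers written for illustration; they are not part of OpenAI's library.

```python
class Conversation:
    """Keeps chat history on the client so each request can carry prior turns."""

    def __init__(self, system_prompt):
        # The first message sets the assistant's overall behavior.
        self.messages = [{"role": "system", "content": system_prompt}]

    def add_user(self, text):
        self.messages.append({"role": "user", "content": text})

    def add_assistant(self, text):
        # Store the model's reply so the next request includes it as context.
        self.messages.append({"role": "assistant", "content": text})

    def payload(self):
        # Return a copy: this full list is what would be sent as "messages".
        return list(self.messages)


chat = Conversation("You are a helpful assistant.")
chat.add_user("What is a token?")
chat.add_assistant("A token is a chunk of text the model reads.")  # placeholder reply
chat.add_user("How many can I send?")
print(len(chat.payload()))  # 4
```

Every stored turn counts against the token limit of the next request, so long conversations eventually need trimming or summarizing.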

For example, let’s say you want to have a conversation with the gpt-3.5-turbo model. To send an HTTP POST request, you need to specify the model and the messages, an array of objects with two fields, role and content, which together make up your prompt. You may also want to vary the temperature, a number between 0 and 2 (values between 0 and 1 are typical) that controls the randomness of the output: values near 0 give more deterministic answers, and higher values give more varied, riskier ones.
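To make the shape of such a request concrete, here is a small sketch that builds the POST request with Python's standard library without sending it. The function name `build_chat_request` and the placeholder key are assumptions for illustration; the endpoint URL and field names follow OpenAI's chat completions API.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key, prompt, temperature=0.7):
    """Build (but do not send) a POST request for the chat endpoint."""
    body = {
        "model": "gpt-3.5-turbo",
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_chat_request("sk-placeholder", "Say hello in one sentence.")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
# Sending it would be: urllib.request.urlopen(request)  (requires a real key)
```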

Keep in mind that these models are not deterministic by default, so sending the same prompt multiple times can produce different answers.
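The link between temperature and randomness can be illustrated with the textbook formulation: scores for candidate tokens are divided by the temperature before being turned into probabilities, and the next token is sampled from those probabilities. The sketch below uses made-up scores and a hypothetical helper name; it shows the general idea and is not a description of OpenAI's internal implementation.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        # Treat 0 as greedy decoding: all probability on the top score.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


tokens = ["cat", "dog", "bird"]
logits = [2.0, 1.0, 0.5]  # made-up scores for illustration

for t in (0.0, 0.7, 1.0):
    probs = softmax_with_temperature(logits, t)
    sample = random.choices(tokens, weights=probs, k=1)[0]
    print(t, [round(p, 3) for p in probs], sample)
```

At temperature 0 the top-scoring token is always chosen; as the temperature rises, the probabilities flatten and repeated runs diverge more often.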

These models work by splitting text into tokens, which can be words, chunks of characters, or single characters; the trained model then predicts which text, or completion, best fits your prompt. Every model on the API has a token limit. For gpt-3.5-turbo the limit is 4,096 tokens, shared between your prompt and the model's answer, so the longer the prompt, the shorter the response can be. That is still more than enough for most simple tasks.
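Since the prompt and the answer share one budget, the room left for the answer is simple arithmetic. The sketch below assumes you already know the prompt's token count (counting tokens is a separate step not shown here); the function name is hypothetical.

```python
CONTEXT_LIMIT = 4096  # shared by prompt and completion, per the text above


def max_completion_tokens(prompt_tokens, context_limit=CONTEXT_LIMIT):
    """Tokens left for the answer once the prompt has been counted."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    return max(context_limit - prompt_tokens, 0)


for prompt_tokens in (100, 3000, 5000):
    print(prompt_tokens, max_completion_tokens(prompt_tokens))
# 100 -> 3996, 3000 -> 1096, 5000 -> 0 (the prompt alone exceeds the limit)
```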

To work around some of these constraints, other tools have been created. For example, the llama-index data framework helps you connect your data sources, index the data, and run queries against it. Because only the relevant parts of your data are sent with each prompt, the context limit becomes much less of an obstacle.

Another important consideration is cost. At the time of writing, the text-davinci-003 model costs $0.02 per 1,000 tokens and the gpt-3.5-turbo model costs $0.002 per 1,000 tokens. Costs can grow quickly with volume.
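A quick estimate shows how the two prices diverge at volume. This sketch uses only the per-1,000-token prices quoted above, which may have changed since; the function and dictionary names are hypothetical.

```python
# Prices per 1,000 tokens in USD, as quoted above; they may have changed since.
PRICE_PER_1K = {
    "text-davinci-003": 0.02,
    "gpt-3.5-turbo": 0.002,
}


def estimate_cost(model, total_tokens):
    """Estimated USD cost for a given number of tokens (prompt + completion)."""
    return total_tokens / 1000 * PRICE_PER_1K[model]


for model in PRICE_PER_1K:
    print(model, round(estimate_cost(model, 1_000_000), 2))
```

At one million tokens, that is roughly $20 for text-davinci-003 against roughly $2 for gpt-3.5-turbo.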

Calling the API using Python

First, we need to install the necessary packages: OpenAI's own Python library for calling the API, the llama-index library, and python-dotenv to read the API key from environment variables:

pip install openai llama-index python-dotenv

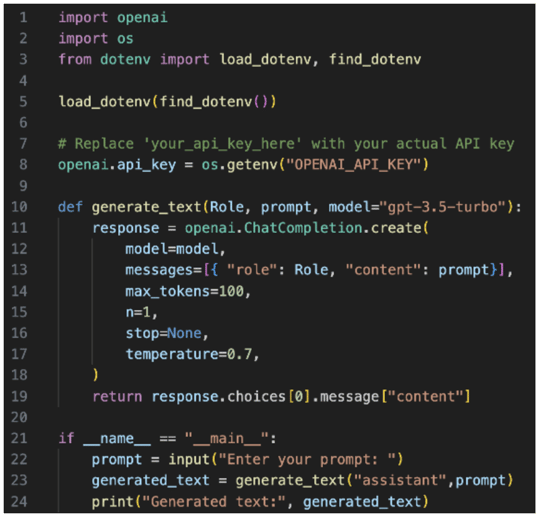

Then call the gpt-3.5-turbo model as shown below:

This is a Python script that makes a basic request. We first import the os and dotenv packages to load the API key; it is best practice not to hard-code the key. Next, we define a function, generate_text, which receives a role and a prompt.

Inside the generate_text function we call the ChatCompletion.create() method to make the request. This method receives the model we want to use (gpt-3.5-turbo) and the messages we want to send, an array of objects, each with a role field (assistant, system, or user) and a content field, which holds our prompt. We also set max_tokens, the maximum length of the completion; n, the number of completions we want; and stop, a sequence of text at which the model should stop generating, usually the end of a conversation. Here stop is None, so no custom stop sequence is used. Finally, we set the temperature to 0.7 to get an interesting response.

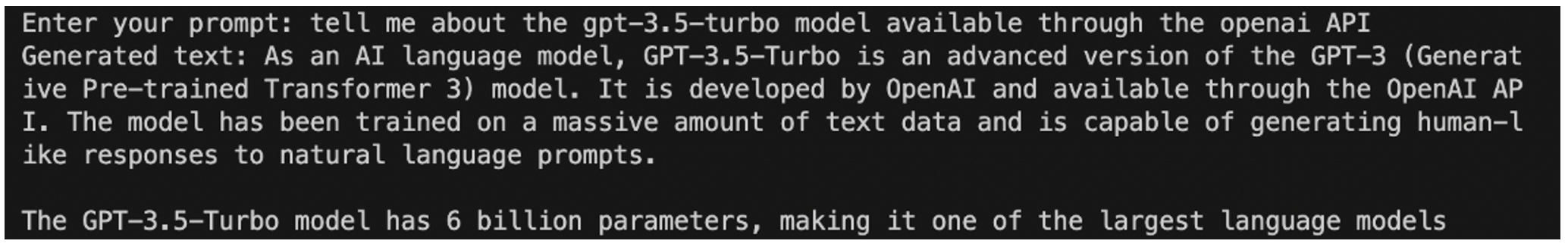
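For readers who cannot see the image, the script described above might look roughly like the sketch below. It assumes a pre-1.0 version of the `openai` package (the one that exposes `ChatCompletion.create()`), an environment variable named `OPENAI_API_KEY`, and a `max_tokens` value of 256; none of these are confirmed by the original script. The helper `build_chat_kwargs` is hypothetical and exists only so the arguments can be inspected without calling the API.

```python
import os


def build_chat_kwargs(role, prompt, max_tokens=256, temperature=0.7):
    """Arguments for ChatCompletion.create(); max_tokens=256 is an assumed value."""
    return {
        "model": "gpt-3.5-turbo",
        "messages": [{"role": role, "content": prompt}],
        "max_tokens": max_tokens,
        "n": 1,        # one completion
        "stop": None,  # no custom stop sequence
        "temperature": temperature,
    }


def generate_text(role, prompt):
    # Imported here so the helper above can be used without these packages.
    import openai  # assumes the pre-1.0 `openai` package
    from dotenv import load_dotenv

    load_dotenv()  # reads a local .env file into the environment
    openai.api_key = os.getenv("OPENAI_API_KEY")  # assumed variable name

    response = openai.ChatCompletion.create(**build_chat_kwargs(role, prompt))
    return response["choices"][0]["message"]["content"]


# Inspect the request arguments without calling the API.
print(build_chat_kwargs("user", "Explain what a large language model is."))
# With a valid key: print(generate_text("user", "Explain what a large language model is."))
```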

We can use this script to communicate with the model:

As you can see, the response is well written and informative about the 6 billion parameters. This is a very large model.

Now, let's say we want to ask questions about our own data, stored in a text file. For that we implement the call using llama-index, so we change the generate_text function to the following code:

On line 12 we add the path to the directory where our data file is located. In this case it is a .txt file with the draft of this post, so we can query against this text. Lines 14 to 32 are our modified function. First, we call LLMPredictor, which wraps the connection between LangChain’s LLMChain and llama-index.

Then we define the maximum number of tokens for the input and for the output, and the maximum overlap between consecutive chunks of text.

Next, the prompt helper is defined to deal with token limits. It can split text into chunks and concatenate them so that each request fits within the model's limit.
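The idea of splitting text into overlapping chunks can be sketched in a few lines of plain Python. This is an illustration of the general technique, not llama-index's implementation: it counts words rather than model tokens, and the function name is hypothetical.

```python
def split_with_overlap(words, chunk_size, overlap):
    """Split a list of words into chunks that share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(words[start:start + chunk_size])
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end
    return chunks


text = "one two three four five six seven eight nine ten"
for chunk in split_with_overlap(text.split(), chunk_size=4, overlap=1):
    print(" ".join(chunk))
# one two three four
# four five six seven
# seven eight nine ten
```

The overlap keeps a little shared context at each boundary, so a sentence cut in two is not lost to both chunks.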

The service context is a utility container for the index and query classes. It holds the LLM predictor and the prompt helper.

Next, we read the documents from the directory where our data is saved.

Finally, we create the index from those documents and the service context. Once we have the index, we are ready to send prompts related to our documents.
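Conceptually, querying an index means finding the stored text most relevant to the question and placing it in the prompt as context. The toy sketch below shows that retrieve-then-prompt flow using simple word overlap as the relevance measure. It is a stand-in for illustration only: it is not llama-index's API or algorithm, and all function names are hypothetical.

```python
import re


def tokenize(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def build_index(chunks):
    """Pair each chunk with its word set (a toy stand-in for a real index)."""
    return [(chunk, tokenize(chunk)) for chunk in chunks]


def retrieve(index, question):
    """Return the chunk sharing the most words with the question."""
    query_words = tokenize(question)
    return max(index, key=lambda item: len(item[1] & query_words))[0]


def build_prompt(index, question):
    context = retrieve(index, question)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


chunks = [
    "Every model on the API has a token limit.",
    "Temperature controls the randomness of the model.",
]
index = build_index(chunks)
print(build_prompt(index, "What does temperature control?"))
```

Only the selected chunk travels with the question, which is how this approach keeps each request inside the token limit even when the underlying data is large.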

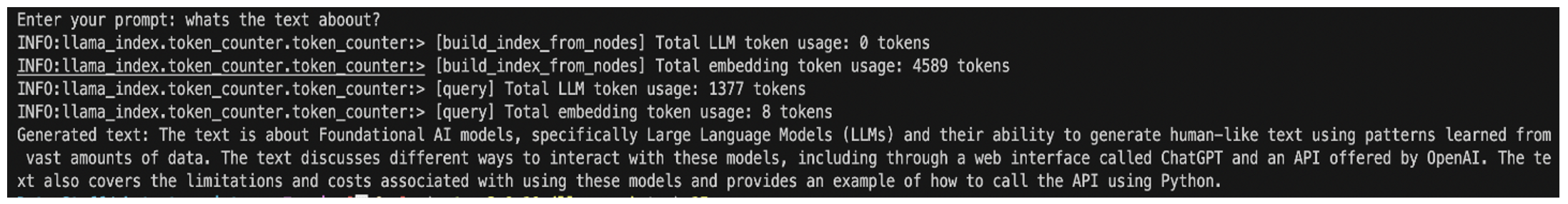

Since the text file contains the draft for this post, we query the model to check if it is able to understand the context of this text.

As one can see from the answer, even though this is not the most advanced model, it gets the context of the article very well.

Key Takeaways

- Large Language Models are huge neural networks with billions of parameters, trained on a large amount of text from the internet.

- There are many things to consider when using the API. Calls to each model may look different and may come with different costs or capabilities.

- Tools like llama-index or LangChain can help you manage these constraints and overcome some of them.
