An important pitfall to avoid when designing production-grade machine learning systems is data leakage. Data leakage happens when a model is trained using information about the target variable that will not be available when the model is released into production. As a consequence, the performance reported on the training and validation sets will probably be very high, but it will not match the performance observed in production. In some cases, the model may not even be deployable at all. Data leakage can arise in many different contexts, each with its own considerations and strategies for identifying it. This article goes over a few of them.

Consider the following classic example from Kohavi et al. We want to train a customer churn prediction model for a service that our company provides. One apparently harmless feature we could use is the “interviewer name” feature. Imagine that we train a model using this feature, and it achieves an extremely high test-set accuracy (for instance, over 98%). After noticing that the performance in production does not match the metrics observed during training, we discover that the company has a specific interviewer dedicated to negotiating with customers who have already expressed their desire to quit the service. By using this feature, we are introducing data leakage: we only know that the customer spoke to that specific interviewer after we had already learned of their intention to churn!

This kind of data leakage is often referred to as “target leakage” because information about the target is leaking into a feature that was actually computed after the target was already known. The “dead giveaway”, as the authors call it, is the reason for the extremely high test accuracy. “If it is too good to be true, it probably is” is a piece of common wisdom that certainly applies to machine learning. However, while the symptoms and cause of data leakage were easy to identify here, this is not always the case. In some cases, the performance gap might be smaller, and if the data leakage only affects a subset of the data, it may only become discernible after extensive exploratory analysis. While there is no clear recipe to detect all sources of data leakage, it helps to be suspicious and, as a first step, examine the features for signs of near-perfect correlation with the target variable. If your algorithm provides feature importances, they can also guide further investigation into other questionable features.
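As a rough illustration of that first screening step, the sketch below flags numeric features whose absolute correlation with the target is suspiciously high. The data is synthetic, and the column names (`monthly_spend`, `retention_call`), the helper `flag_suspicious_features` and the 0.95 threshold are all hypothetical choices made for this example, not a rule from the references.

```python
import numpy as np
import pandas as pd


def flag_suspicious_features(features, target, threshold=0.95):
    """Return features whose absolute Pearson correlation with the target exceeds the threshold."""
    correlations = features.corrwith(target).abs()
    return correlations[correlations > threshold].sort_values(ascending=False)


# Synthetic churn data (hypothetical columns, for illustration only)
rng = np.random.default_rng(0)
n_customers = 500
churned = pd.Series(rng.integers(0, 2, size=n_customers), name="churned")
features = pd.DataFrame({
    # An ordinary feature, unrelated to the target in this toy data
    "monthly_spend": rng.normal(50, 10, size=n_customers),
    # A leaky feature: it is essentially the target plus a little noise
    "retention_call": churned + rng.normal(0, 0.05, size=n_customers),
})

suspicious = flag_suspicious_features(features, churned)
print(suspicious)
```

A feature flagged this way is not necessarily a leak, and a leak does not always show up as a high linear correlation, so a check like this is only a starting point for the investigation.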

Variables generated after the moment a prediction is made are not usable.

To avoid target leakage, it is critical to have domain knowledge and a deep understanding of the meaning of the variables that are being fed into the model. The model developer should use this knowledge to identify and remove any features that may have been influenced by information obtained after the target variable was observed, like the “interviewer name”. Data leakage can also be hidden behind aggregations or data transformations that reference the target variable directly or indirectly, so it is important to know not only the meaning of the features, but also their full lineage.

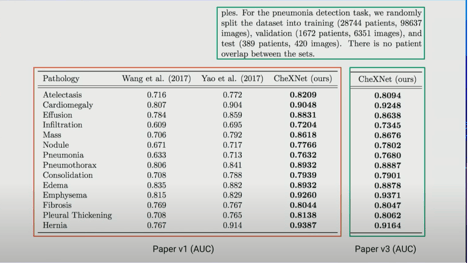

Another scenario in which data leakage can occur is when the training data contains multiple instances of a group or entity, also known as group leakage. Yuriy Guts tells the story of how he identified group leakage in a preprint of a paper from a research group led by none other than the renowned Andrew Ng. The paper was about identifying pneumonia and other pathologies from chest X-rays using deep learning, and the team split a dataset of 112,120 images from 30,805 distinct patients. Guts correctly pointed out that, since there is more than one image per patient, a simple train-test split could place images of the same patient in both the training and validation sets, giving the model the opportunity to learn from the idiosyncrasies of a patient, such as scars or other body indicators, rather than from the actual features of a pathology. To make matters worse, deep learning models are particularly prone to “cheating” by relying on potentially irrelevant features, as illustrated by humorous adversarial examples such as the picture of a green apple with the handwritten text “Apple” being classified as an iPod by a neural network. In Ng’s case, the mistake was indeed corrected and, as a result, many of the AUC metrics for pathology detection decreased by a non-trivial amount.
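One way to keep every instance of a group on the same side of the split is a group-aware splitter. The sketch below uses scikit-learn's `GroupShuffleSplit` on synthetic data; the `patient_ids` array and the feature matrix are made up for illustration and have nothing to do with the actual X-ray dataset or with how the paper's authors fixed their split.

```python
import numpy as np
from sklearn.model_selection import GroupShuffleSplit

# Synthetic stand-in: 200 "images" belonging to up to 40 hypothetical patients
rng = np.random.default_rng(0)
n_images = 200
patient_ids = rng.integers(0, 40, size=n_images)
X = rng.normal(size=(n_images, 5))
y = rng.integers(0, 2, size=n_images)

# Split by patient rather than by image, so no patient spans both sets
splitter = GroupShuffleSplit(n_splits=1, test_size=0.2, random_state=0)
train_idx, test_idx = next(splitter.split(X, y, groups=patient_ids))

# Sanity check: the two sets should have no patient in common
shared_patients = set(patient_ids[train_idx]) & set(patient_ids[test_idx])
print(f"Patients appearing in both sets: {len(shared_patients)}")
```

Note that `test_size` here refers to the fraction of groups, not of rows, so the number of images in the test set can deviate from 20% when patients have different numbers of images.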

Changes in the metrics reported in Andrew Ng’s paper after the data leakage was fixed.

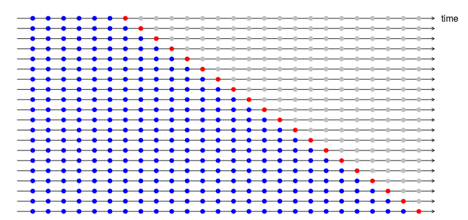

In time series forecasting, incorrect data splitting is also a common culprit of data leakage. Performing a random train-test split on a time series dataset, where the goal is typically to predict future outcomes, will have the unfortunate result of training the model on future time periods and evaluating it on past ones. This means that whatever metrics are produced by that evaluation will not match the performance of the model when it is used only to predict future outcomes. This may be obvious to anyone who has previously worked on time series data, but it is a common beginner mistake. The solution here is to split training and testing data at a set date, a functionality that most time-series prediction libraries include. A more sophisticated approach is to perform cross-validation on a rolling forecast. In this process, a portion of the time series is used to train a model, and evaluation is performed on the point that immediately follows it. Then, the training portion is rolled forward to include more data points, and the evaluation point becomes the one that follows the extended portion. The test accuracy is averaged over all evaluations. Not only does this prevent data leakage, it also yields a more robust estimate of test performance.

Example of rolling cross-validation in forecasting.
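The rolling scheme described above can be sketched with scikit-learn's `TimeSeriesSplit`, which always places the training indices before the test indices. This is only an approximation of the procedure in the text: each fold here is evaluated on a block of later points rather than on a single point, and the synthetic trend series and the linear model are assumptions made for the example.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import TimeSeriesSplit

# Synthetic series: a linear trend plus noise, indexed by time step
rng = np.random.default_rng(0)
n_points = 120
time_steps = np.arange(n_points)
X = time_steps.reshape(-1, 1)
y = 0.5 * time_steps + rng.normal(scale=2.0, size=n_points)

# Each fold trains on an expanding window and tests on the block right after it
splitter = TimeSeriesSplit(n_splits=5)
folds = list(splitter.split(X))

fold_errors = []
for train_idx, test_idx in folds:
    model = LinearRegression().fit(X[train_idx], y[train_idx])
    predictions = model.predict(X[test_idx])
    fold_errors.append(mean_absolute_error(y[test_idx], predictions))

# Average the error over all folds, as in the rolling evaluation
print(f"Mean absolute error across folds: {np.mean(fold_errors):.2f}")
```

Passing `test_size=1` to `TimeSeriesSplit` would bring the sketch closer to the single-point evaluation described in the text, at the cost of noisier per-fold errors.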

Data leakage can also result from faulty data preprocessing steps. For example, if you need to perform variable imputation, but the imputer is fit on the whole dataset before splitting the data into training and testing sets, information from the distribution of the test set leaks into the imputer, so the measured performance is likely to be higher than it would be on completely unseen data. This is called train-test contamination and can generally be avoided by splitting before performing any fitting or preprocessing steps.
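To make the contamination concrete, the following sketch compares an imputer fit on the whole dataset with one fit on the training portion only. The data is synthetic and the variable names (`leaky_imputer`, `clean_imputer`) are just labels for this illustration.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.model_selection import train_test_split

# Synthetic single-feature dataset with roughly 20% missing values
rng = np.random.default_rng(0)
X = rng.normal(loc=10.0, scale=3.0, size=(200, 1))
X[rng.random(200) < 0.2, 0] = np.nan

X_train, X_test = train_test_split(X, test_size=0.25, random_state=0)

# Contaminated: the fill value is computed from training AND test rows
leaky_imputer = SimpleImputer(strategy="mean").fit(X)

# Clean: the fill value is computed from training rows only
clean_imputer = SimpleImputer(strategy="mean").fit(X_train)

print(f"Fill value using all data:      {leaky_imputer.statistics_[0]:.4f}")
print(f"Fill value using training data: {clean_imputer.statistics_[0]:.4f}")

# The test set is only ever transformed, never used for fitting
X_test_imputed = clean_imputer.transform(X_test)
```

With a simple mean the difference between the two fill values is usually small, but the same mistake with scalers, encoders or feature selection can inflate the measured performance much more.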

A good practical tip to avoid leakage issues associated with data processing is to always use a pipeline framework, such as sklearn pipelines. Sklearn allows you to assemble your training steps sequentially into a pipeline model. First, the pipeline is set up, specifying data transformations and estimators such as imputers, normalizers, dimensionality reduction, etc. Then, the data is split into training and validation sets. The training data is fed into the pipeline, the estimators are fit and the model is trained. Finally, the validation set is passed to the trained pipeline to generate predictions and evaluate the model. This ensures that any preprocessing steps that store some kind of state (like means, observed values, etc.) operate exclusively on the data observed during training. Here is an example of a simple pipeline:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline

# Generating synthetic data
X, Y = make_classification(n_samples=1000, n_features=6, n_classes=2)

# Splitting before any fitting, so the test set stays unseen
train_X, test_X, train_Y, test_Y = train_test_split(X, Y, test_size=0.2)

# Setting up the pipeline with two steps: imputation and logistic regression
training_pipeline = Pipeline([
    ('imputer', SimpleImputer(missing_values=np.nan, strategy='mean')),
    ('logistic_regression', LogisticRegression())
])

# Fitting the imputer and the logistic regression on exclusively training data
pipeline_model = training_pipeline.fit(train_X, train_Y)

# When predicting and evaluating, the pipeline has already fixed
# the values of the imputer based on training data, so there is no leak
print(f"Training accuracy: {pipeline_model.score(train_X, train_Y)}")
print(f"Test accuracy: {pipeline_model.score(test_X, test_Y)}")
```

We have reviewed several kinds of data leakage along with common examples, including target leakage, group leakage, leakage in time series data and train-test contamination. We have also proposed several practical recommendations to avoid or detect data leakage. Training and validation data should be split as early as possible, and ML pipeline frameworks are recommended to ensure only training data influences the model. When using time series data, time-based splitting should be favored over random splitting. If the training data contains multiple instances of an entity or a group, additional care must be taken to prevent instances of the same group from ending up in both the training and the validation sets. Finally, features that are derived from the target variable must be removed, which requires an understanding of the features, domain expertise and data exploration. This is by no means an exhaustive recipe to get rid of all leaks. A good ML practitioner should rely on good intuition and always be on the lookout for “smells” that point to potential data leakage. And never forget the old adage: if the model seems too good to be true, it probably is.

References

- Ron Kohavi, Carla E. Brodley, Brian Frasca, Llew Mason, and Zijian Zheng. 2000. KDD-Cup 2000 organizers' report: peeling the onion. SIGKDD Explor. Newsl. 2, 2 (Dec. 2000), 86–93. https://doi.org/10.1145/380995.381033

- Shachar Kaufman, Saharon Rosset, Claudia Perlich, and Ori Stitelman. 2012. Leakage in data mining: Formulation, detection, and avoidance. ACM Trans. Knowl. Discov. Data 6, 4, Article 15 (December 2012), 21 pages. https://doi.org/10.1145/2382577.2382579 https://www.cs.umb.edu/~ding/history/470_670_fall_2011/papers/cs670_Tran_PreferredPaper_LeakingInDataMining.pdf

- Alexis Cook, Data Leakage Tutorial https://www.kaggle.com/code/alexisbcook/data-leakage/tutorial

- Yuriy Guts, Target Leakage in Machine Learning. Conference presentation at AI Ukraine. https://www.youtube.com/watch?v=dWhdWxgt5SU

- Rob Hyndman, Cross-validation for time series. https://robjhyndman.com/hyndsight/tscv/

- Sklearn pipelines documentation. https://scikit-learn.org/stable/modules/generated/sklearn.pipeline.Pipeline.html

- 'Typographic attack': pen and paper fool AI into thinking apple is an iPod, The Guardian https://www.theguardian.com/technology/2021/mar/08/typographic-attack-pen-paper-fool-ai-thinking-apple-ipod-clip
