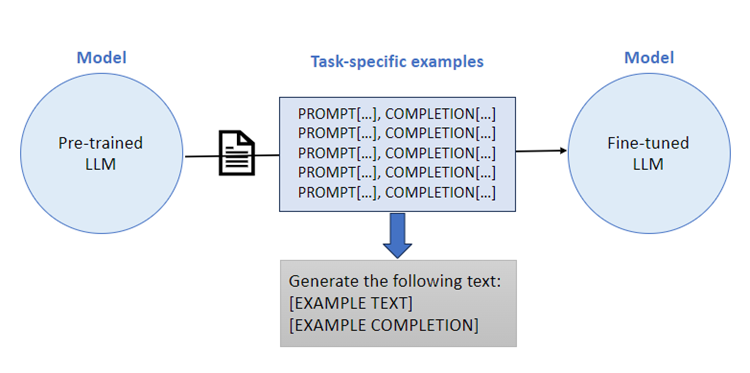

Fine-tuning is a powerful technique in natural language processing that lets us adapt pre-trained language models to specialized tasks. In this article, we explore fine-tuning DistilBART, a distilled version of Facebook's BART, to generate medical summaries from JSON data. DistilBART suits this task because it retains much of BART's ability to understand and generate language while being smaller and faster.

Understanding Fine-Tuning

Fine-tuning is the process of taking a pre-trained language model and adapting it to perform a specific task or function. Unlike training a model from scratch, fine-tuning capitalizes on the knowledge embedded in pre-existing models, making it a more resource-efficient approach.

Fine-tuning becomes indispensable in scenarios where task-specific adaptation is required. To illustrate this, let's consider a scenario in medical natural language processing. Imagine you have a pre-trained language model that has not undergone fine-tuning, and you want it to generate medical summaries from unstructured text containing medical jargon and abbreviations.

Example Scenario

Non-Fine-Tuned Model:

- Input Prompt: "Patient presents with elevated BP, bilateral breath sounds, BX, MRI, and ECG."

- Output: The non-fine-tuned model may struggle to interpret medical abbreviations such as "MRI" (Magnetic Resonance Imaging) and "ECG" (Electrocardiogram), and may not recognize less common shorthand such as "BP" (Blood Pressure) and "BX" (Biopsy). Lacking medical domain knowledge, it could produce inaccurate or nonsensical summaries.

Fine-Tuned Model:

- Input Prompt: "Patient presents with elevated BP, bilateral breath sounds, BX, MRI, and ECG."

- Output: In contrast, a fine-tuned model, trained further on medical data, is better able to produce accurate summaries. It recognizes abbreviations such as "BP" and "BX", expands them to "Blood Pressure" and "Biopsy", and correctly interprets terms like "MRI" and "ECG", producing contextually relevant summaries.

In this scenario, the input prompt is indeed the same for both the non-fine-tuned and fine-tuned models. The critical difference lies in the models' abilities to understand and generate accurate summaries based on that input prompt, with the fine-tuned model performing better due to its familiarity with medical domain-specific language.

Fine-Tuning Process

1. Data Preprocessing:

In the initial phase, you prepare your dataset for fine-tuning through a series of steps, including cleaning, partitioning into training, validation, and test sets, and ensuring compatibility with the model. Effective data preparation is crucial for the subsequent stages of the process.

Example:

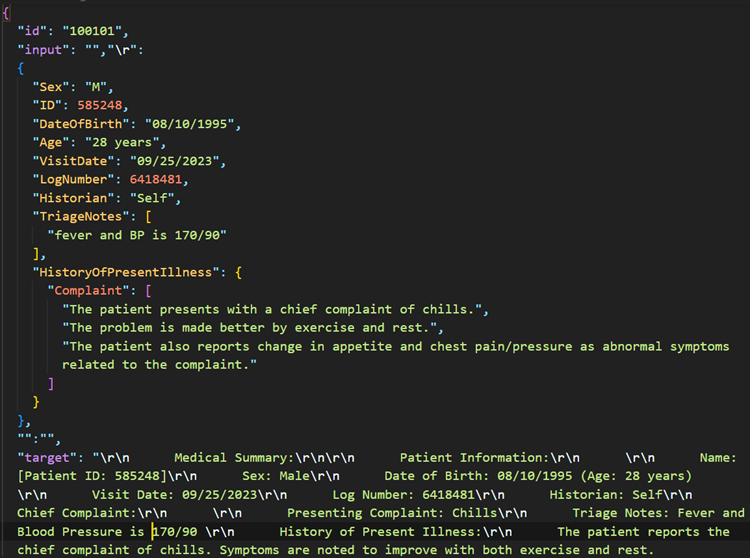

Consider a JSON dataset containing medical records with structured fields like "patient_history," "diagnosis," and "treatment_plan." Clean the data by removing irrelevant entries, and tokenize the medical records for effective processing.

I split my data into train.json (80%), test.json (10%), and val.json (10%).
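The cleaning and 80/10/10 split described above might look like the following minimal sketch. It assumes the raw data is a list of JSON objects with the patient_history, diagnosis, and treatment_plan fields mentioned earlier, plus a reference summary stored under a hypothetical `summary` key; records missing any of these are treated as irrelevant and dropped. The shuffle seed is an arbitrary choice.

```python
import json
import random
from pathlib import Path

# Field names from the example above; "summary" is a hypothetical target field.
REQUIRED_FIELDS = ("patient_history", "diagnosis", "treatment_plan", "summary")


def clean_records(records):
    """Keep only records whose required fields are present, strings, and non-empty."""
    return [
        r for r in records
        if all(isinstance(r.get(f), str) and r[f].strip() for f in REQUIRED_FIELDS)
    ]


def split_records(records, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle reproducibly, then split into train/val/test (80/10/10 by default)."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder, roughly 10%
    return train, val, test


def write_splits(records, out_dir="."):
    """Clean, split, and write train.json, val.json, and test.json to out_dir."""
    train, val, test = split_records(clean_records(records))
    for name, part in (("train", train), ("val", val), ("test", test)):
        Path(out_dir, f"{name}.json").write_text(json.dumps(part, indent=2))
    return len(train), len(val), len(test)
```

Shuffling before splitting keeps any ordering in the source file (for example, by date or specialty) from leaking into a single split.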

Sample Dataset:

2. Model Initialization:

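Begin by loading a pre-trained language model together with its existing weights. Large Language Models (LLMs) such as GPT-3 or GPT-4 are familiar examples, although their weights are not publicly released, so they can only be fine-tuned where the provider offers this as a service; openly available models such as BART can be downloaded and fine-tuned directly. Because these models have already learned from a wide range of text, they are strong starting points for fine-tuning.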

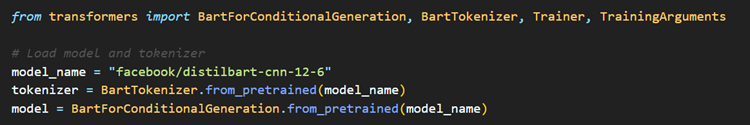

For this project, the chosen pre-trained model is DistilBART, a distilled version of Facebook's BART. Because it inherits BART's pre-training on large text corpora, it provides a robust foundation for further fine-tuning.

3. Task-Specific Architecture:

Task-Specific Layer: A task-specific layer is a tailored component within a model's architecture designed to address the nuances and requirements of a particular task. These layers are added or adjusted to enhance the model's proficiency in handling specific tasks while retaining its broader language understanding capabilities acquired during pre-training.

For example, for medical summary generation with DistilBART, the architecture can be customized by adding or modifying task-specific layers to optimize how the model generates summaries from structured data. In practice, DistilBART loaded as a sequence-to-sequence model already includes a language-modeling head suited to summarization, so fine-tuning often updates the existing weights rather than adding new layers. Either way, the goal is for the model to become adept at the intricacies of the medical domain while keeping its pre-existing language understanding.

4. Training:

Once the customized architecture is established, the model undergoes training using a task-specific dataset. Throughout the training process, the model's weights are iteratively adjusted via backpropagation and gradient descent, responding to the information presented in the dataset. This iterative learning process enables the model to discern and internalize patterns and relationships specific to the designated task.

Example with DistilBART Training: To illustrate, consider the scenario of training a model, such as DistilBART, for medical summary generation. The modified architecture, designed to capture nuances in medical data, is employed in the training process. As the model processes the medical dataset, its weights are fine-tuned, allowing it to grasp intricate medical patterns and relationships. This targeted training approach ensures the model's adaptability and proficiency in generating accurate medical summaries.

- Task: Generating concise summaries from EMR/EHR

- Training Data: Annotated dataset of EHRs/EMRs containing patient information, diagnoses, treatments, and outcomes.

- Input: "Patient presented with SOB and elevated BP. DX: CAD. Rx: ASA, NTG. Follow-up: ECG, LDL."

- Output: "Patient presented with shortness of breath (SOB) and elevated blood pressure (BP). Diagnosis: Coronary Artery Disease (CAD). Treatment: Aspirin (ASA), Nitroglycerin (NTG). Follow-up: Electrocardiogram (ECG), Low-Density Lipoprotein (LDL) test."
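To connect the input/output example above to training data, here is a minimal sketch that stores the pair as one JSON record and runs a simple consistency check: does every all-caps abbreviation in the input appear in parentheses in the target summary? The field names `input_text` and `summary` are hypothetical, and the check is an illustrative data-quality idea rather than part of the original pipeline.

```python
import json
import re

# The abbreviated note and its expanded reference summary from the example above.
example = {
    "input_text": "Patient presented with SOB and elevated BP. DX: CAD. Rx: ASA, NTG. "
                  "Follow-up: ECG, LDL.",
    "summary": "Patient presented with shortness of breath (SOB) and elevated blood "
               "pressure (BP). Diagnosis: Coronary Artery Disease (CAD). Treatment: "
               "Aspirin (ASA), Nitroglycerin (NTG). Follow-up: Electrocardiogram (ECG), "
               "Low-Density Lipoprotein (LDL) test.",
}


def unexpanded_abbreviations(source, summary):
    """List all-caps abbreviations in source that never appear as "(ABBR)" in summary."""
    abbrevs = dict.fromkeys(re.findall(r"\b[A-Z]{2,}\b", source))  # ordered, de-duplicated
    return [a for a in abbrevs if f"({a})" not in summary]


# One JSON object per line (JSON Lines) keeps large training files streamable.
line = json.dumps(example)
print(unexpanded_abbreviations(example["input_text"], example["summary"]))
```

On this pair the check prints `['DX']`, because the summary renders that abbreviation as the label "Diagnosis:" rather than as "(DX)"; a real check would likely need an allow-list for such section labels.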

5. Hyperparameter Tuning and Parameter Manipulation:

Hyperparameters are configuration settings chosen before training rather than learned from the data, and they strongly influence how well training proceeds and how the final model performs. Fine-tuning involves adjusting key hyperparameters such as the learning rate, batch size, and regularization strength.

Illustrative Examples:

Learning Rate:

The learning rate controls the step size during the optimization process, determining how much the model adjusts its weights.

Manipulation: Experiment with values like 1e-5, 2e-5, 5e-5, and observe the impact on convergence.

Effect: A smaller learning rate (e.g., 1e-5) allows precise weight adjustments but may slow convergence. A larger rate (e.g., 5e-5) speeds up convergence but risks overshooting.
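The step-size trade-off is easy to see on a toy problem. This sketch runs plain gradient descent on the one-parameter loss (w - 3)^2, whose minimum is at w = 3. The learning rates are chosen to suit this toy loss, not DistilBART, where the 1e-5 to 5e-5 range above applies.

```python
def gradient_descent(lr, steps=20, w0=0.0):
    """Minimize the toy loss (w - 3)^2 with a fixed learning rate."""
    w = w0
    for _ in range(steps):
        grad = 2 * (w - 3)  # derivative of (w - 3)^2
        w -= lr * grad      # each step moves w by lr times the gradient
    return w


for lr in (0.01, 0.1, 1.1):
    print(f"lr={lr}: w after 20 steps = {gradient_descent(lr):.4f}")
```

After 20 steps the smallest rate is still far from 3 (slow convergence), the middle rate lands close to 3, and the largest rate overshoots further on every step and diverges.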

Batch Size:

Batch size refers to the number of training examples in one iteration, affecting computational efficiency and model stability.

Manipulation: Try different batch sizes like 8, 16, 32, or 64 to find a balance.

Effect: Smaller batches may introduce noise but aid generalization. Larger batches expedite training but might reduce model generalization.
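Batch size also fixes how many weight updates happen per epoch: halving it doubles the number of updates, each computed from fewer examples and therefore noisier. The sketch below assumes a hypothetical training set of 800 examples; that number is illustrative and does not reflect this article's dataset.

```python
import math
import random


def make_batches(examples, batch_size, seed=0):
    """Shuffle once, then yield consecutive mini-batches (the last may be smaller)."""
    order = list(examples)
    random.Random(seed).shuffle(order)
    for start in range(0, len(order), batch_size):
        yield order[start:start + batch_size]


def steps_per_epoch(num_examples, batch_size):
    """Number of weight updates in one full pass over the training data."""
    return math.ceil(num_examples / batch_size)


for bs in (8, 16, 32, 64):
    print(f"batch_size={bs}: {steps_per_epoch(800, bs)} updates per epoch")
```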

Regularization Strength:

Regularization helps prevent overfitting by adding a penalty term to the loss function based on the magnitude of the weights.

Manipulation: Experiment with values like 0.01, 0.1, 1.0, adjusting the regularization impact.

Effect: Higher values impose stronger penalties, preventing overfitting but risking underfitting. Lower values allow flexibility but may lead to overfitting.
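A minimal sketch of an L2 penalty makes the role of the strength value concrete: the same data loss is inflated more as the strength grows, pushing training toward smaller weights. The weights and loss here are toy values, and this formulation uses strength * sum(w^2) (some texts use strength/2).

```python
def l2_regularized_loss(data_loss, weights, strength):
    """Total loss = data loss + strength * sum of squared weights (L2 penalty)."""
    penalty = sum(w * w for w in weights)
    return data_loss + strength * penalty


weights = [0.5, -1.2, 2.0]  # toy weights
for strength in (0.01, 0.1, 1.0):
    print(strength, l2_regularized_loss(1.0, weights, strength))
```

In the Hugging Face Trainer the corresponding setting is `weight_decay`, which the default AdamW optimizer applies as decoupled weight decay on the weights rather than as an explicit term in the loss; the intuition about larger versus smaller values is the same.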

Iterative Tuning:

Conduct multiple experiments, adjusting one hyperparameter at a time, and observe changes in model performance. Iteratively refine hyperparameter values based on validation performance.
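The one-at-a-time procedure can be sketched as a coordinate-wise search. `fake_validation_loss` is a hypothetical stand-in; in practice `evaluate` would fine-tune the model with the given configuration and return its validation loss, which is far more expensive.

```python
def tune_one_at_a_time(base_config, search_space, evaluate):
    """Vary one hyperparameter at a time, keeping the best value found so far.

    evaluate(config) must return a validation loss (lower is better).
    """
    best = dict(base_config)
    best_loss = evaluate(best)
    for name, candidates in search_space.items():
        for value in candidates:
            trial = {**best, name: value}
            loss = evaluate(trial)
            if loss < best_loss:
                best, best_loss = trial, loss
    return best, best_loss


def fake_validation_loss(cfg):
    # Hypothetical stand-in for "fine-tune with cfg, then measure validation loss".
    return abs(cfg["learning_rate"] - 3e-5) * 1e4 + abs(cfg["batch_size"] - 16) / 16


BASE = {"learning_rate": 5e-5, "batch_size": 8}
SPACE = {"learning_rate": [1e-5, 2e-5, 5e-5], "batch_size": [8, 16, 32, 64]}
print(tune_one_at_a_time(BASE, SPACE, fake_validation_loss))
```

Searching one axis at a time is cheap but can miss interactions between hyperparameters (for example, learning rate and batch size); a full grid or random search covers those at higher cost.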

Training Results:

- Learning Rate (learning_rate): 5e-05
- Epochs: 3
- Training Runtime: 155.2034 seconds
- Training Samples per Second: 2.242

Evaluation Results:

- Epoch: 3.0
- Evaluation Loss: 1.4209576845169067
- Evaluation Runtime: 0.6088 seconds
- Evaluation Samples per Second: 1343.647

These results provide insights into the model's training and evaluation performance under the specified hyperparameter configuration. Continuous experimentation and refinement of hyperparameters are essential for finding the optimal balance between training efficiency and generalization.

6. Validation:

Throughout the training process, it is crucial to closely observe the model's performance on a dedicated validation dataset. This monitoring step serves to evaluate the model's learning progress in relation to the defined task and helps identify any signs of overfitting to the training data. Adjustments can be made based on the insights gained from the validation results, ensuring the model's robustness and adaptability.

Example: For instance, in the context of training DistilBART for medical summary generation, the validation phase involves assessing how well the model captures the nuances of medical information. The validation dataset, comprising diverse medical scenarios, acts as a benchmark for the model's performance. If the model demonstrates a high level of accuracy on the training data but struggles with new, unseen medical cases in the validation set, adjustments to hyperparameters or model architecture may be necessary. This iterative validation process contributes to refining the model's proficiency in generating accurate and contextually relevant medical summaries.
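A simple way to watch for the overfitting pattern described above is to compare per-epoch training and validation losses and flag epochs where training loss keeps falling while validation loss rises. The loss values below are hypothetical, for illustration only.

```python
def overfitting_epochs(train_losses, val_losses):
    """Return 1-based epochs where training loss fell but validation loss rose.

    That divergence is a common sign the model is starting to memorize the
    training data instead of generalizing.
    """
    flagged = []
    for i in range(1, len(val_losses)):
        train_improved = train_losses[i] < train_losses[i - 1]
        val_worsened = val_losses[i] > val_losses[i - 1]
        if train_improved and val_worsened:
            flagged.append(i + 1)
    return flagged


# Hypothetical per-epoch losses.
train = [2.1, 1.6, 1.2, 0.9, 0.6]
val = [2.0, 1.7, 1.5, 1.55, 1.7]
print(overfitting_epochs(train, val))  # prints [4, 5]
```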

7. Testing:

Following the completion of the training phase, the next critical step involves assessing the model's capabilities on an independent test dataset that it has not encountered during the training process. This phase offers an impartial evaluation, providing insights into the model's performance and its capacity to effectively process new, unseen data. The aim is to verify the model's reliability in real-world scenarios, ensuring its competence beyond the training environment.

Example: For instance, if the model was trained on cases related to cardiovascular issues, the test dataset could include scenarios from other medical domains, such as respiratory or neurological conditions. The model's performance on this unseen medical data gauges its adaptability and generalization capabilities. A successful test outcome indicates that the DistilBART model can reliably generate accurate and contextually relevant medical summaries across a spectrum of medical scenarios, affirming its practical utility.
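The article does not say which metric it used on the test set; ROUGE is one common choice for summarization, so the sketch below swaps it in. It is a simplified ROUGE-1 F1 written from scratch (lowercase whitespace tokens, no stemming or punctuation handling), averaged per specialty to mirror the cross-domain example above. Libraries such as Hugging Face `evaluate` offer fuller ROUGE implementations.

```python
from collections import Counter, defaultdict


def rouge1_f1(reference, candidate):
    """Simplified ROUGE-1 F1: clipped unigram overlap on lowercase whitespace tokens."""
    ref = Counter(reference.lower().split())
    cand = Counter(candidate.lower().split())
    overlap = sum((ref & cand).values())  # min count of each shared token
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def score_by_specialty(examples):
    """examples: iterable of (specialty, reference, generated) triples."""
    scores = defaultdict(list)
    for specialty, reference, generated in examples:
        scores[specialty].append(rouge1_f1(reference, generated))
    return {s: sum(v) / len(v) for s, v in scores.items()}
```

A large gap between specialties (for example, cardiology versus neurology) would suggest the model has not generalized beyond the domains it saw most during training.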

8. Iterative Process:

Fine-tuning is frequently an iterative journey, requiring ongoing refinement based on the feedback from validation and test datasets. In response to the results obtained from these evaluation sets, it becomes necessary to iteratively adjust various elements such as the model's architecture, hyperparameters, or even the training data itself. This iterative process is essential for honing the model's performance and ensuring its effectiveness in handling specific tasks.

Example: In the context of medical summary generation with DistilBART, the iterative fine-tuning process involves continuously assessing the model's performance on diverse medical scenarios. For instance, if the initial training primarily focused on cardiovascular summaries, iterative adjustments could include introducing new medical cases related to different specialties like oncology or neurology. Fine-tuning iterations based on the validation and test outcomes enable the DistilBART model to adapt and refine its understanding of medical language nuances, ultimately enhancing its proficiency in generating accurate and relevant medical summaries.

9. Early Stopping:

Integrating early stopping mechanisms is a critical strategy to ward off overfitting during the training process. If the model's performance reaches a plateau or exhibits degradation on the validation set, early stopping allows the training to be discontinued, preventing the model from becoming overly specialized to the training data. This not only conserves computational resources but also safeguards the model's ability to generalize effectively to new, unseen data.

Example: In medical summary generation with DistilBART, early stopping guards against overfitting to specific medical cases. For instance, if during fine-tuning the model starts memorizing the details of a subset of medical scenarios and its performance on new cases in the validation set stops improving, early stopping is triggered. This prevents further training that could compromise the model's adaptability, helping its learned patterns remain applicable to a broad spectrum of medical contexts and supporting its reliability in generating clinically relevant summaries.
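The patience rule behind early stopping can be sketched in a few lines: stop once the validation loss has failed to improve on its best value for a set number of consecutive epochs. The patience value and losses here are illustrative assumptions. Hugging Face Transformers provides this as `EarlyStoppingCallback` (with `early_stopping_patience` and `early_stopping_threshold`), which relies on periodic evaluation and `load_best_model_at_end=True` in the training arguments.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return the 1-based epoch at which training would stop, or None.

    Training stops once the validation loss has not improved on the best value
    by more than min_delta for `patience` consecutive epochs.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None


print(early_stopping_epoch([1.9, 1.6, 1.45, 1.47, 1.46, 1.40]))  # prints 5
```

Here training stops at epoch 5 even though epoch 6 would have improved, which shows the trade-off: a larger patience tolerates temporary plateaus at the cost of more compute.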

10. Deployment:

Once validation and testing are complete, the fine-tuned model is ready for deployment in real-world scenarios. This involves integrating it into software systems or services so that it can perform tasks such as text generation, answering queries, or making recommendations. Deployment marks the model's transition from the development environment to practical applications.

Example: In a specific instance, the fine-tuned DistilBART model for medical summary generation undergoes deployment after showcasing proficiency in validation and testing phases. This model is then made accessible through platforms like HuggingFace, providing real-world access to its capabilities. The deployment enables healthcare professionals or applications to utilize the model for extracting meaningful medical summaries from structured data. Users can interact with the model through HuggingFace's platform, leveraging its fine-tuned capabilities to generate accurate and concise medical summaries tailored to specific clinical scenarios.

Overview of Facebook's BART (DistilBART)

BART (Bidirectional and Auto-Regressive Transformers) represents a major advance in natural language understanding and generation. The "Distil" in "DistilBART" refers to distillation: the process of creating a more compact and computationally efficient version of the original BART model.

In the realm of neural network models, "distillation" involves training a smaller model to replicate the behavior and knowledge of a larger, more complex model. The objective is to capture the essential information and generalization capabilities of the larger model while reducing computational requirements.
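One common way to train a small "student" to mimic a larger "teacher" is a soft-target loss: the student is penalized by the KL divergence between the teacher's and its own temperature-softened output distributions. The plain-Python sketch below computes that loss for a single prediction. It illustrates the general technique and is not necessarily the exact recipe used to produce the released DistilBART checkpoints; in practice this term is usually combined with ordinary cross-entropy on the training labels, and the temperature of 2.0 is an arbitrary choice.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, softened by the temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the large model
    q = softmax(student_logits, temperature)  # predictions of the small model
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2
```

Scaling by T^2 keeps the gradient magnitude of the soft-target term roughly comparable across temperatures.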

In the case of DistilBART, it is a distilled version of the BART model, designed to maintain remarkable capabilities while being more resource-efficient. The distillation process involves training a smaller model to mimic the performance of the original BART model, making it suitable for applications where computational resources may be limited without sacrificing significant performance.

In summary, "distilled" in the context of DistilBART refers to the process of creating a more streamlined and resource-efficient version of the BART model, enabling effective use in scenarios with computational constraints.

For more details about BART, please read https://huggingface.co/facebook/bart-large

DistilBART demonstrates prowess not only in medical summarization, as highlighted in our case study, but also in various other natural language processing tasks. Its efficiency makes it suitable for applications such as document summarization, text generation, sentiment analysis, and more. The model's versatility allows it to adapt to different domains and tasks with relative ease.

Parameters and Architecture: DistilBART inherits BART's encoder-decoder architecture: a bidirectional encoder (similar to BERT) and an auto-regressive decoder (similar to GPT). Both are built from stacked attention and feed-forward layers, and the decoder also attends to the encoder's output. DistilBART has fewer parameters than its parent model because some encoder and/or decoder layers are removed; the distilbart-cnn-12-6 checkpoint, for example, keeps 12 encoder layers and 6 decoder layers, versus 12 of each in BART-large. This makes it computationally cheaper while retaining much of BART's performance.

Training Data Set: DistilBART inherits BART's pre-training, which exposed the model to a large, general-domain text corpus (books, news, web text, and other written content) and the wide range of linguistic patterns and structures it contains. The released DistilBART checkpoints are derived from BART models fine-tuned for summarization on datasets such as CNN/DailyMail and XSum. Starting from such a checkpoint, DistilBART can be fine-tuned further on specialized tasks such as medical summarization.

Fine-Tuning: Fine-tuning DistilBART involves exposing the model to domain-specific datasets, such as medical records or specialized corpora, to ensure its proficiency in task-specific applications. This process refines the model's understanding of domain-specific language nuances and improves its performance on targeted tasks.

Demo

Now, let's dive into a hands-on demonstration of fine-tuning with Facebook's DistilBART model for medical summary generation from JSON data. Follow along with the steps below:

Step 1: Setting Up the Environment

Ensure you have the required libraries installed. You can use the following commands to install them:
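Assuming the demo depends on `transformers`, `datasets`, and `torch` (an assumption based on the Hugging Face workflow used here), this small check reports which of them are missing before you run the later steps.

```python
import importlib.util

# Assumed dependencies for this demo; one way to install them:
#   pip install transformers datasets torch
REQUIRED_PACKAGES = ("transformers", "datasets", "torch")


def missing_packages(packages=REQUIRED_PACKAGES):
    """Return the names of packages that cannot be imported in this environment."""
    return [name for name in packages if importlib.util.find_spec(name) is None]


missing = missing_packages()
print("Missing:", ", ".join(missing) if missing else "none")
```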

Step 2: Loading the Pre-Trained DistilBART Model and Tokenizer
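A minimal sketch of this step using the transformers Auto classes follows. The checkpoint name `sshleifer/distilbart-cnn-12-6` is an assumption: it is one publicly available DistilBART summarization checkpoint, not necessarily the one this project started from. The import sits inside the function so the file can be loaded without transformers installed.

```python
# Assumed starting checkpoint: a public DistilBART summarization model on the Hub.
DEFAULT_CHECKPOINT = "sshleifer/distilbart-cnn-12-6"


def load_model_and_tokenizer(checkpoint=DEFAULT_CHECKPOINT):
    """Download (or load from the local cache) a seq2seq model and its tokenizer."""
    # Lazy import: only needed when the model is actually loaded.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForSeq2SeqLM.from_pretrained(checkpoint)
    return model, tokenizer
```

Calling `model, tokenizer = load_model_and_tokenizer()` should return a BART-based conditional-generation model that already includes the language-modeling head used for summarization, together with its matching tokenizer.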

Step 3: Preprocessing JSON Data

Preprocess the JSON data to create input text for the model:

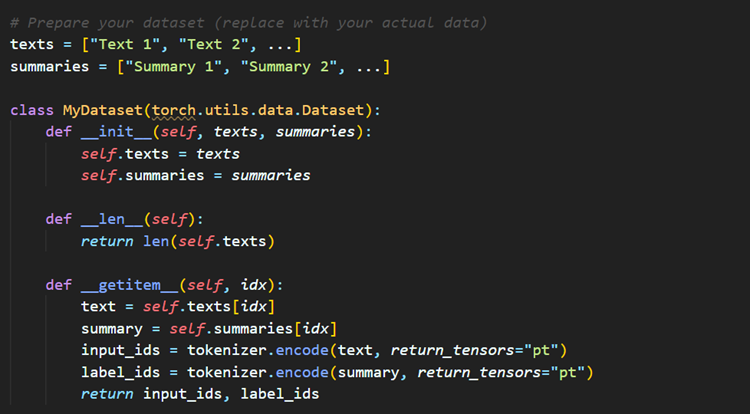

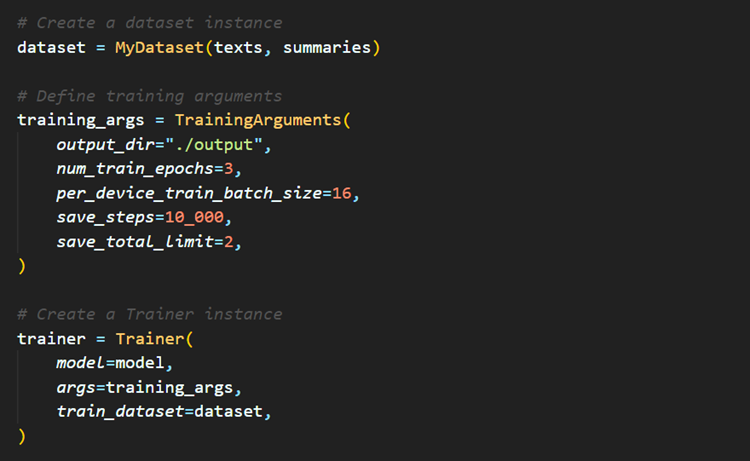
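A minimal standard-library sketch of this step, assuming each record carries the patient_history, diagnosis, and treatment_plan fields described earlier and a reference summary under a hypothetical `summary` key. Each record is flattened into one labeled input string; the " | " separator and the output field names `input_text` and `summary` are illustrative choices.

```python
import json

# Source fields from the dataset description; "summary" is an assumed target field.
SOURCE_FIELDS = ("patient_history", "diagnosis", "treatment_plan")


def record_to_example(record):
    """Flatten one structured record into an input string plus its target summary."""
    parts = []
    for field in SOURCE_FIELDS:
        value = record.get(field)
        if value:  # skip missing or empty fields
            label = field.replace("_", " ").title()  # "patient_history" -> "Patient History"
            parts.append(f"{label}: {value}")
    return {"input_text": " | ".join(parts), "summary": record.get("summary", "")}


def load_examples(path):
    """Read a JSON file containing a list of records and convert each one."""
    with open(path, encoding="utf-8") as f:
        return [record_to_example(r) for r in json.load(f)]
```

Keeping the field labels in the input text lets the model tell history, diagnosis, and treatment apart even though they arrive as a single string.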

Step 4: Training and Saving the Model
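A minimal sketch of training and saving with the Hugging Face `Seq2SeqTrainer`. The learning rate (5e-05) and epoch count (3) match the training results reported in the hyperparameter section; batch sizes, weight decay, maximum lengths, and the `input_text`/`summary` field names are assumptions. It also assumes train.json and val.json share the same columns and are in a format `datasets` can read (for example, JSON Lines).

```python
# Learning rate and epochs match the reported results; the rest are assumptions.
HYPERPARAMS = {
    "learning_rate": 5e-5,
    "num_train_epochs": 3,
    "per_device_train_batch_size": 8,
    "per_device_eval_batch_size": 8,
    "weight_decay": 0.01,
}
MAX_INPUT_LEN = 512
MAX_TARGET_LEN = 128


def train_and_save(checkpoint, data_dir, output_dir):
    """Fine-tune a seq2seq checkpoint on train.json/val.json, then save it."""
    # Lazy imports so this file loads even where transformers is not installed.
    from datasets import load_dataset
    from transformers import (
        AutoModelForSeq2SeqLM,
        AutoTokenizer,
        DataCollatorForSeq2Seq,
        Seq2SeqTrainer,
        Seq2SeqTrainingArguments,
    )

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForSeq2SeqLM.from_pretrained(checkpoint)

    # Assumes records with hypothetical "input_text" and "summary" fields.
    raw = load_dataset(
        "json",
        data_files={"train": f"{data_dir}/train.json", "validation": f"{data_dir}/val.json"},
    )

    def tokenize(batch):
        features = tokenizer(batch["input_text"], max_length=MAX_INPUT_LEN, truncation=True)
        labels = tokenizer(text_target=batch["summary"], max_length=MAX_TARGET_LEN, truncation=True)
        features["labels"] = labels["input_ids"]
        return features

    tokenized = raw.map(tokenize, batched=True, remove_columns=raw["train"].column_names)

    trainer = Seq2SeqTrainer(
        model=model,
        args=Seq2SeqTrainingArguments(output_dir=output_dir, **HYPERPARAMS),
        train_dataset=tokenized["train"],
        eval_dataset=tokenized["validation"],
        # Pads inputs and labels per batch; padded label positions are set to -100.
        data_collator=DataCollatorForSeq2Seq(tokenizer, model=model),
    )
    trainer.train()
    metrics = trainer.evaluate()  # includes eval_loss and eval_runtime
    trainer.save_model(output_dir)
    tokenizer.save_pretrained(output_dir)  # trainer was not given the tokenizer
    return metrics
```

A call such as `train_and_save("sshleifer/distilbart-cnn-12-6", "data", "distilbart-med-summary")` (all three arguments hypothetical) would train, print progress, and leave a directory that `from_pretrained` can load.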

Step 5: Deploying the Model

Once the model is trained, it can be hosted on HuggingFace or another platform.

Here it is hosted on HuggingFace at the following URL:

https://huggingface.co/Mahalingam/DistilBART-Med-Summary
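Assuming the hosted checkpoint is a standard seq2seq model that the transformers summarization pipeline can load (not verified here), it could be called from Python as follows. The pipeline is created once and cached, and the generation length of 128 tokens is an arbitrary choice.

```python
from functools import lru_cache

# Model ID taken from the URL above.
MODEL_ID = "Mahalingam/DistilBART-Med-Summary"


@lru_cache(maxsize=1)
def get_summarizer(model_id=MODEL_ID):
    """Build the summarization pipeline once and reuse it across calls."""
    from transformers import pipeline  # lazy import; requires transformers

    return pipeline("summarization", model=model_id)


def summarize(text, max_length=128):
    """Generate a summary for one clinical note with the hosted model."""
    result = get_summarizer()(text, max_length=max_length, truncation=True)
    return result[0]["summary_text"]
```

For example, `summarize("Patient presented with SOB and elevated BP. DX: CAD. Rx: ASA, NTG.")` downloads the model on first use and returns the generated summary as a string.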

Key Takeaways

- Effectiveness of Fine-Tuning: Fine-tuning is a powerful strategy for adapting pre-trained language models to specialized tasks, unlocking their potential in domain-specific applications such as medical summary generation.

- Power of BART (DistilBART): DistilBART, a distilled version of Facebook's BART, is an efficient and effective model for intricate language tasks, including the demanding domain of medical summarization.