Image Source: Created using Canva

Summary:

Software testers, prepare to be revolutionized! This blog dives into a buzzworthy topic of the current era: how Generative AI, combined with the art of 'Prompt Engineering,' is set to redefine every phase of the Software Test Life Cycle (STLC). Explore a future where your expertise meets AI's precision, making software testing more efficient, insightful, and groundbreaking than ever before!

Learn about a range of innovative prompting techniques and how to master them to uncover nuanced insights and make software testing more effective. Explore why mastering these prompts matters, what their limitations are, and how to overcome them to fine-tune your testing approach.

This read is your concise guide to understanding and leveraging prompt methodologies to their full extent, enhancing every facet of software testing!

Introduction:

"Can the fusion of human skill and artificial intelligence reshape the landscape of software testing?" This thought-provoking question invites us to look beyond traditional testing paradigms and embrace the transformative power of Generative AI.

"We can only see a short distance ahead, but we can see plenty there that needs to be done."

Alan Turing

As we stand on the cusp of this new era in software testing, Turing's words resonate deeply. In this vast realm, the arrival of Generative AI is like the meteoric rise of a new star. Its brilliance, however, lies not just in its computing prowess but in how effectively we can communicate with it. At the heart of this communication lies 'Prompt Engineering': the meticulous art of crafting questions or statements to elicit precise responses.

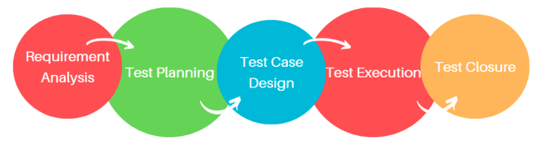

But how does this dovetail with the traditional Software Test Life Cycle (STLC)? Let's journey through each phase to uncover the synergy.

Image Source: Created using Canva

Requirement Analysis

Image Source: Created using Canva

In the first phase of the STLC, understanding and prioritizing testing requirements is paramount. Generative AI can be an invaluable asset here, but only if it is asked the right questions. With well-crafted prompts, one can quickly gain insight into questions such as:

- Which requirements are most critical?

- Are there any conflicting requirements?

- Which features demand the most exhaustive testing?

For instance, instead of vaguely asking the AI, "Which requirements are important?", one can employ prompt engineering to ask:

"Given our software's primary function of X, which testing requirements would be most critical to ensure its robustness?"
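When the same well-crafted question will be reused across several products or modules, it can help to keep it as a fill-in template rather than retyping it each time. Below is a minimal Python sketch using the standard library's `string.Template`. The names `REQUIREMENT_PROMPT`, `build_requirement_prompt`, and the `primary_function` placeholder (standing in for "X") are illustrative assumptions, not part of any tool.

```python
from string import Template

# Hypothetical reusable template for the requirement-prioritization prompt;
# $primary_function stands in for the "X" in the example prompt.
REQUIREMENT_PROMPT = Template(
    "Given our software's primary function of $primary_function, "
    "which testing requirements would be most critical to ensure its robustness?"
)


def build_requirement_prompt(primary_function: str) -> str:
    """Fill in the template, refusing an empty description of the function."""
    if not primary_function.strip():
        raise ValueError("primary_function must not be empty")
    # substitute() raises KeyError if any placeholder is left unfilled
    return REQUIREMENT_PROMPT.substitute(primary_function=primary_function.strip())


if __name__ == "__main__":
    print(build_requirement_prompt("processing fund transfers"))
```

Keeping the template in one place means every tester on the team sends the same, deliberately worded question, and only the product-specific detail changes.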

Example:

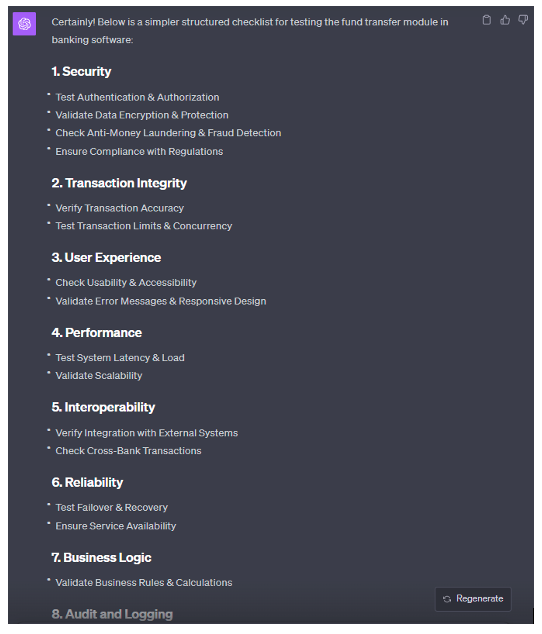

Imagine a banking application whose fund transfer module needs testing. A software tester can prompt the AI with:

"Given the regulatory guidelines and common user behaviors, which requirements should be prioritized for the fund transfer module's testing in banking software?"

The AI might return a list of prioritized requirements such as two-factor authentication checks, daily transfer limits, and cross-border transaction regulations. This helps keep the testing compliant and focused on the areas that matter most.

Test Planning

Image Source: By pikisuperstar on Freepik

The foundation of any successful test lies in detailed planning, which reduces the risk of the QA team missing deadlines.

Here, prompts can be framed to guide the QA lead on:

- Scope determination

- Risk assessment

- Resource allocation

- Timeline projections

With well-framed prompts, AI can provide insights that help offset human biases or catch what humans overlook.

"Considering our application's user base and functionalities, how should we prioritize our testing scope?"

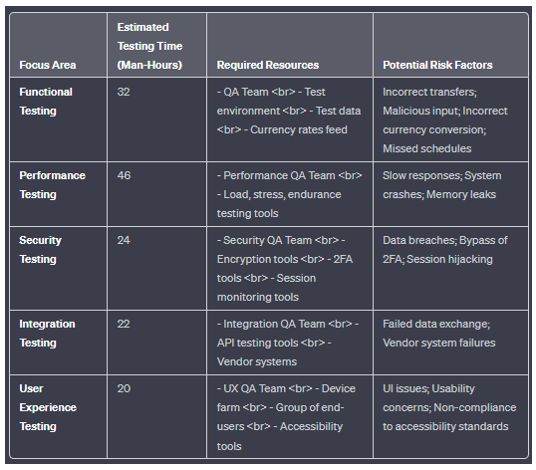

Example: Our software tester is now planning the testing activities for the bank's fund transfer module. They could use the following prompt to get estimated time, resources, and risk factors:

"Given the importance of the fund transfer feature in our banking application, could you leverage your extensive domain knowledge to identify critical focus areas and formulate a comprehensive test plan? A concise tabular format would be preferred for clear visibility and review. For each focus area identified, please provide:

1. Estimated Testing Time (in Man-Hours)

2. Required Resources (including teams and tools)

3. Potential Risk Factors"

Based on this input, the AI can provide a tailored plan, drawing on its domain knowledge and its knowledge of similar applications to identify areas where automation could speed up the process, or to flag certain tests as high-risk based on patterns seen in such applications.
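Because the prompt asks for a tabular answer, the response can be checked programmatically before it goes into the plan, for example confirming that every row carries all three requested fields and totalling the estimated effort. Below is a minimal Python sketch, assuming the AI replies with a Markdown pipe table in the requested column order. `PlanRow`, `parse_plan_table`, and the sample response are hypothetical and for illustration only.

```python
from dataclasses import dataclass


@dataclass
class PlanRow:
    focus_area: str
    man_hours: float
    resources: str
    risks: str


def parse_plan_table(markdown: str) -> list[PlanRow]:
    """Parse a four-column Markdown pipe table into PlanRow objects.

    Assumes a header row, a separator row (| --- | ... |), then one row per
    focus area in the column order the prompt requested. Cells containing a
    literal "|" are not supported in this sketch.
    """
    table_lines = [ln.strip() for ln in markdown.splitlines() if ln.strip().startswith("|")]
    rows = []
    for line in table_lines[2:]:  # skip the header and separator rows
        cells = [c.strip() for c in line.strip("|").split("|")]
        if len(cells) != 4:
            raise ValueError(f"expected 4 columns, got {len(cells)}: {line!r}")
        area, hours, resources, risks = cells
        # float() raises ValueError on answers like "16 hours", a cue to re-prompt
        rows.append(PlanRow(area, float(hours), resources, risks))
    return rows


# Made-up sample response, for illustration only.
SAMPLE_RESPONSE = """
| Focus Area | Estimated Testing Time (Man-Hours) | Required Resources | Potential Risk Factors |
| --- | --- | --- | --- |
| Authentication | 16 | QA team, OTP simulator | Bypass of two-factor checks |
| Daily transfer limits | 8 | QA team, seeded test accounts | Boundary errors at the limit |
"""

if __name__ == "__main__":
    rows = parse_plan_table(SAMPLE_RESPONSE)
    total = sum(r.man_hours for r in rows)
    print(f"{len(rows)} focus areas, {total:g} man-hours in total")  # 2 focus areas, 24 man-hours in total
```

A parse failure is useful signal in itself: it usually means the AI drifted from the requested format, and a short follow-up prompt restating the columns tends to be cheaper than fixing the table by hand.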

Test Case Design

Image Source: Created using Freepik (Text to Image converter)

Generative AI, when properly prompted, can aid in creating test cases that are both exhaustive and efficient.

A prompt like the one below can serve as a foundation for generating a list of test cases based on the requirements and known issues:

"Given the specified requirements and known vulnerabilities of similar applications, what would be the most comprehensive test cases for our software?"

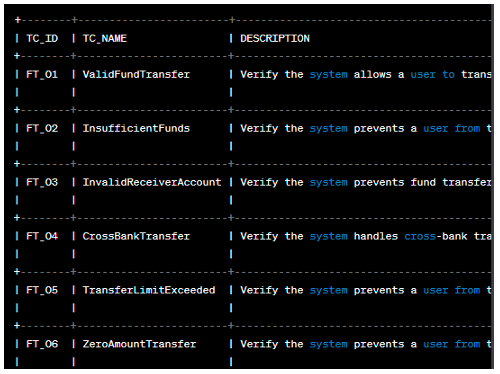

Example: For the same fund transfer module, the tester might prompt:

"Based on the prioritized requirements, suggest comprehensive test cases covering all critical scenarios. Utilize your extensive domain knowledge while creating the test cases. To make sure no scenarios are overlooked, your test cases should cover the most common scenarios as well as edge cases that could potentially break the feature.

For every scenario you plan to test, create a separate entry in the test case document, adhering to the following tabular structure:

- TC_ID: Provide a unique identification number for each test scenario.

- TC_NAME: Assign a succinct, descriptive name that captures the essence of the test scenario.

- DESCRIPTION: Paint a vivid picture of what the test scenario is designed to verify or uncover in the fund transfer feature.

- PRIORITY: Use your expertise to prioritize the test scenario as High, Medium, or Low.

- PRE_REQUISITE: Clearly define any conditions or steps that must be satisfied before the test can be performed.

- STEPS TO BE FOLLOWED: Lay out a clear, step-by-step path that anyone could follow to reproduce the test scenario.

- EXPECTED RESULT: Describe in detail what the expected outcome should be if the feature works as designed.

- TEST DATA: Describe in detail all required test data to perform the test on the feature to ensure it works as designed"

The AI could then return a table of test cases following this structure.
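Because the prompt pins down eight fields per test case, the returned rows can be sanity-checked before they are imported into a test management tool: no blank fields, priorities on the requested High/Medium/Low scale, and unique IDs. Below is a minimal Python sketch, assuming the AI output has already been parsed into one dictionary per row keyed by the field names from the prompt. `REQUIRED_FIELDS` and `validate_test_cases` are hypothetical names.

```python
REQUIRED_FIELDS = (
    "TC_ID", "TC_NAME", "DESCRIPTION", "PRIORITY", "PRE_REQUISITE",
    "STEPS TO BE FOLLOWED", "EXPECTED RESULT", "TEST DATA",
)
ALLOWED_PRIORITIES = {"High", "Medium", "Low"}


def validate_test_cases(cases: list[dict[str, str]]) -> list[str]:
    """Return human-readable problems; an empty list means every row passed the checks."""
    problems = []
    seen_ids = set()
    for index, case in enumerate(cases, start=1):
        tc_id = str(case.get("TC_ID", "")).strip()
        label = tc_id or f"row {index}"
        # Every field requested in the prompt must be present and non-blank.
        for field_name in REQUIRED_FIELDS:
            if not str(case.get(field_name, "")).strip():
                problems.append(f"{label}: missing {field_name}")
        # PRIORITY must use the High/Medium/Low scale the prompt asked for.
        priority = str(case.get("PRIORITY", "")).strip()
        if priority and priority not in ALLOWED_PRIORITIES:
            problems.append(f"{label}: unexpected PRIORITY {priority!r}")
        # TC_ID must be unique across the returned rows.
        if tc_id:
            if tc_id in seen_ids:
                problems.append(f"{label}: duplicate TC_ID")
            seen_ids.add(tc_id)
    return problems


if __name__ == "__main__":
    complete = {name: "filled in" for name in REQUIRED_FIELDS}
    complete.update(TC_ID="TC_001", PRIORITY="High")
    incomplete = {"TC_ID": "TC_001", "TC_NAME": "Transfer above daily limit", "PRIORITY": "Urgent"}
    for problem in validate_test_cases([complete, incomplete]):
        print(problem)
```

Checks like these do not judge whether a test case is *good*; they only catch structural gaps, leaving the tester's review time for the content itself.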

Test Environment Setup

Image Source: Created using Canva and Picsart

While setting up the test environment, prompt engineering can help foresee potential hiccups. Queries such as "Given our software's architecture and dependencies, what potential challenges might we face in a Linux test environment?" can surface issues early so they are addressed before they disrupt test runs.

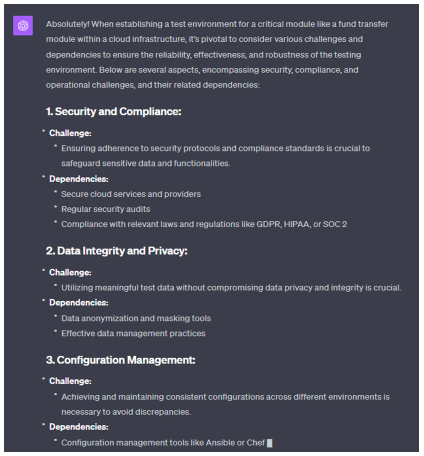

Example:

“Given the critical nature of a fund transfer module within a banking application operating in cloud infrastructure, could you leverage your extensive knowledge in software testing and cloud environments to enumerate the potential challenges and dependencies that should be meticulously considered while establishing a test environment for this module? Please elucidate any security, compliance, and operational aspects that should be regarded, and specify any dependencies that could affect the reliability, effectiveness, and robustness of the testing environment.”

The AI might highlight the need to simulate real-world network conditions, protect data privacy, and mimic realistic server loads.

Test Execution

Image Source: Created using Freepik (Text to Image converter)

The execution phase is where ideas are put into action. With precise prompts, AI can assist in real time to:

- Adapt test cases based on interim findings.

- Allocate resources dynamically.

- Predict potential bottlenecks.

Example:

As the tests for the fund transfer module begin, the tester might need real-time feedback. A prompt like the one below could lead the AI to point out missed edge cases or recommend re-runs under different conditions:

"Analyze the ongoing test results and suggest any adjustments or additional tests that might be required."
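The AI can only analyze results it is actually given, so in practice the interim results need to travel with this prompt. Below is a minimal Python sketch of one way to do that, assuming results are available as simple (ID, status, note) records. `InterimResult` and `build_execution_prompt` are hypothetical names, not part of any test tool's API.

```python
from dataclasses import dataclass


@dataclass
class InterimResult:
    tc_id: str
    status: str  # e.g. "PASS", "FAIL", "BLOCKED"
    note: str = ""


def build_execution_prompt(results: list[InterimResult]) -> str:
    """Append a compact summary of interim results to the execution-phase prompt.

    Passing tests are reduced to a count; only non-passing ones are listed,
    which keeps the prompt short as the suite grows.
    """
    passed = sum(1 for r in results if r.status == "PASS")
    details = [
        f"- {r.tc_id}: {r.status}" + (f" ({r.note})" if r.note else "")
        for r in results
        if r.status != "PASS"
    ]
    return (
        "Analyze the ongoing test results and suggest any adjustments or "
        "additional tests that might be required.\n\n"
        f"Executed so far: {len(results)} test cases, {passed} passed.\n"
        "Non-passing results:\n" + ("\n".join(details) if details else "- none")
    )


if __name__ == "__main__":
    interim = [
        InterimResult("TC_001", "PASS"),
        InterimResult("TC_002", "FAIL", "transfer above the daily limit was accepted"),
        InterimResult("TC_003", "BLOCKED", "OTP service unavailable in test environment"),
    ]
    print(build_execution_prompt(interim))
```

Summarizing passes as a count is a deliberate trade-off: it keeps the prompt focused on what needs attention, at the cost of hiding details the AI might otherwise correlate.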

Test Closure

As testing draws to a close, it's crucial to ensure that every criterion has been met, results are well-documented, and insights are ready for stakeholders. Here, prompt engineering can be employed for tasks such as "Analyze the test results and summarize key findings and potential areas of improvement."

Example: Once testing wraps up, our tester could use AI to assist with documentation using a prompt like:

"Based on the completed test cycles, please generate a concise test closure summary highlighting the critical aspects of the testing process. Include information on the total number of test cases, pass and fail rates, defects discovered and their severity, and any risks or blockers identified. Also, please incorporate insights on the effectiveness of the testing process, any areas for improvement, and recommendations for subsequent testing cycles or releases. Please structure the summary in a clear and coherent manner, focusing on the accuracy and relevancy of the provided information."

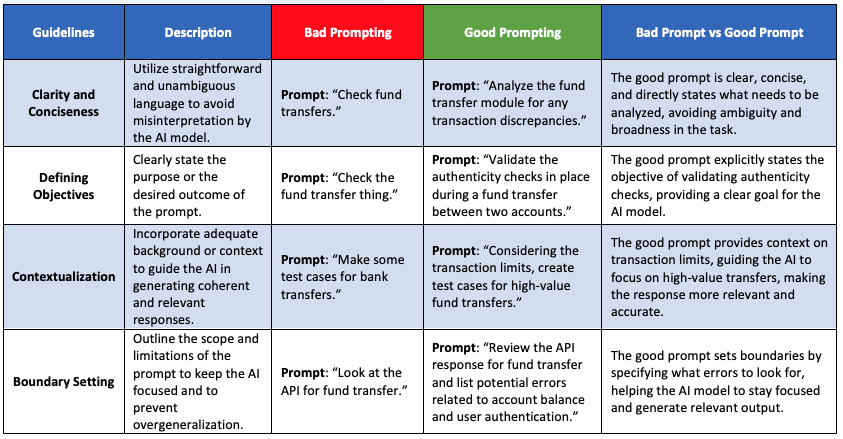
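The closure prompt asks the AI to report totals and pass/fail rates, but it can be safer to compute those figures yourself and hand them to the AI, since language models can misstate arithmetic. The AI then only has to write the narrative around numbers that are already correct. Below is a minimal Python sketch of that split, assuming each executed case is recorded with a pass flag and the severities of any defects it raised. `ExecutedCase` and `closure_metrics` are hypothetical names, and the three sample cases are made up.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ExecutedCase:
    tc_id: str
    passed: bool
    defect_severities: list[str] = field(default_factory=list)  # e.g. ["Critical"]


def closure_metrics(cases: list[ExecutedCase]) -> dict:
    """Compute the figures the closure prompt asks for, so the AI does not have to."""
    total = len(cases)
    passed = sum(1 for c in cases if c.passed)
    severities = Counter(s for c in cases for s in c.defect_severities)
    return {
        "total_test_cases": total,
        "pass_rate_pct": round(100 * passed / total, 1) if total else 0.0,
        "fail_rate_pct": round(100 * (total - passed) / total, 1) if total else 0.0,
        "defects_by_severity": dict(severities),
    }


if __name__ == "__main__":
    executed = [
        ExecutedCase("TC_001", True),
        ExecutedCase("TC_002", False, ["Critical", "Minor"]),
        ExecutedCase("TC_003", True),
    ]
    metrics = closure_metrics(executed)
    facts = "\n".join(f"{key}: {value}" for key, value in metrics.items())
    prompt = (
        "Based on the completed test cycles, please generate a concise test "
        "closure summary. Use exactly these figures:\n" + facts
    )
    print(prompt)
```

With the sample data, the prompt would carry a 66.7% pass rate, a 33.3% fail rate, and one Critical and one Minor defect, leaving the AI to explain them rather than calculate them.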

Guidelines for Mastering Prompt Writing

Let's elaborate on each guideline by comparing bad and good prompts, using the fund transfer feature in the banking domain as an example.

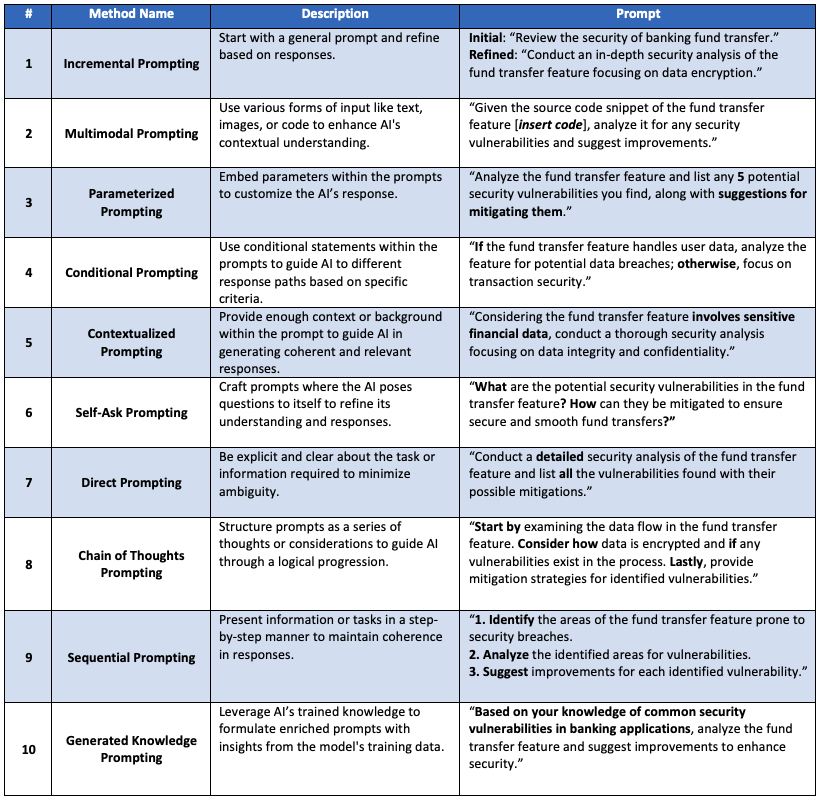

10 Diverse Prompting Methods to Make the Most of Generative AI Models

When we test software features, especially in critical domains like banking, e-commerce, and healthcare, using different kinds of prompts can help us get the most out of generative AI models. By asking the AI in various ways, we can gather many useful insights and make sure no stone is left unturned in examining the software's safety and functionality.

The table below lists ten different ways to ask AI to perform the same task, each with a short explanation and an example focused on checking the security of a bank's fund transfer feature. It is meant to help testers understand how to create clear and effective prompts that find and address potential issues thoroughly and efficiently, and how the output differs depending on the prompting method used.

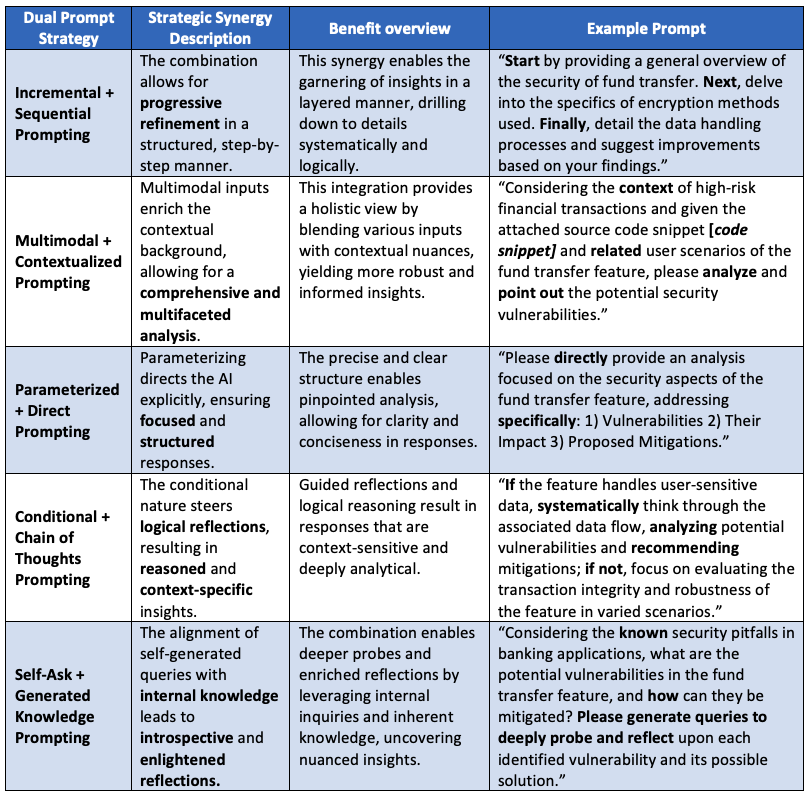

The Transformative Power of Mastering Dual Prompting Strategies

Effective prompting is an underappreciated craft, especially in software testing for critical domains like banking, e-commerce, healthcare, and education. Melding two distinct prompting strategies can add depth to AI interactions and significantly enhance their effectiveness.

The table below explores this fusion approach by pairing complementary methods, helping the Generative AI model stay focused and produce richer responses with sharper analysis. It also includes examples that show the transformative power of combined, or dual, prompting strategies.

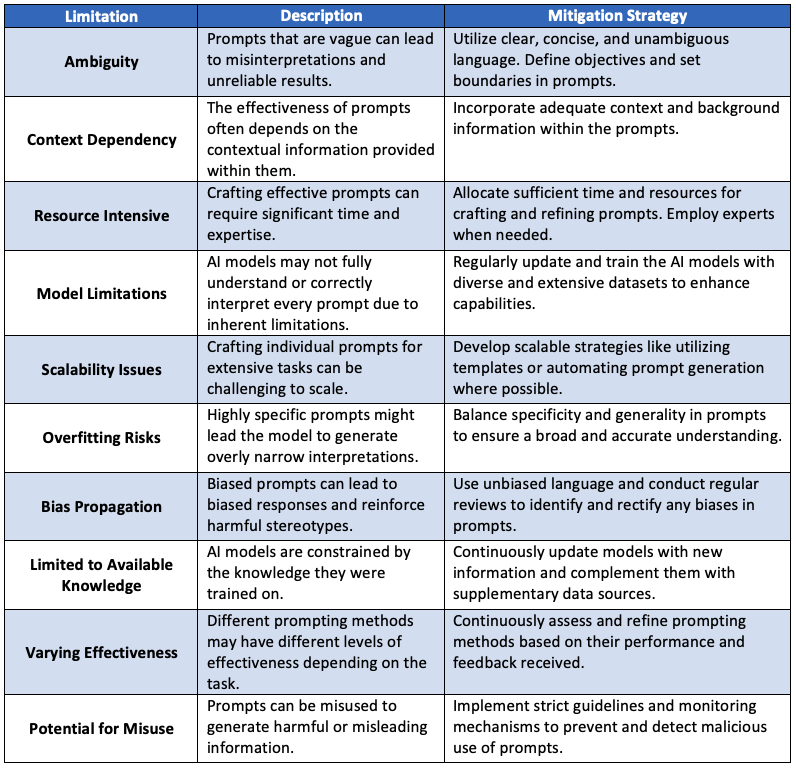

Limitations

Conclusion:

This blog has been a journey through the significance of creating effective prompts for Generative AI models, particularly within the realm of software testing. The strategies and insights shared here aim to empower readers, offering practical tools and knowledge to master the art of prompting. This mastery is crucial for those looking to enhance interactions, precision, and reliability in software applications, thereby contributing to robust software development and enhanced user trust. As you implement these prompt crafting techniques, you are not just learning, but evolving your approach to creating safer and smarter software solutions. I hope this blog serves as a valuable resource, leading to transformative outcomes in your software endeavors.

About Encora:

Encora specializes in providing software engineering services with a Nearshore advantage especially well-suited to established and start-up software companies, and industry. We have been headquartered in Silicon Valley for over 20 years, and have engineering centers in Latin America (Costa Rica, Peru, Bolivia and Colombia). The Encora model is highly collaborative, Agile, English language, same U.S. time-zone, immediately available engineering resources, economical and quality engineering across the Product Development Lifecycle.

Author Bio

- Name: Keerthi Vaddi

- Position at Encora: Sr. QA Manager

- Education: B.Tech (Computer Science & Engineering)

- Experience: 12 years

- LinkedIn Profile: https://www.linkedin.com/in/keerthi-vaddi-730a9a1b9/