In the previous part of this series, the key characteristics desirable on a machine learning model capable of Anomaly Detection were discussed, as well as some of the classic techniques that could be applied to solve it. Despite their importance in the history of this area, most of them fall short of providing all the features, but new techniques keep being developed and improved, year after year.

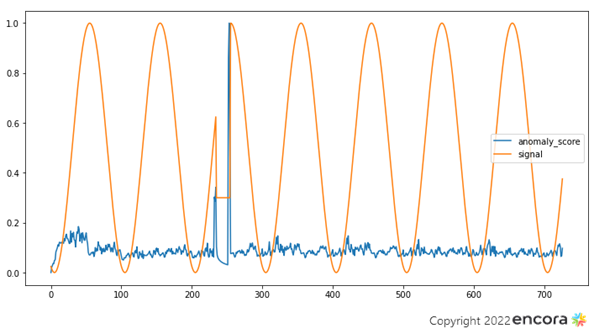

Given the various requirements of a modern Machine Learning solution for anomaly detection, one of the best solutions available is the Robust Random Cut Forest (RRCF) algorithm [Guha et al, 2016], published in 2016 by Amazon engineers. As the name may suggest, it is a tree-based (or, more specifically, a tree ensemble or forest) with built-in approaches to bring robustness to an already scalable unsupervised technique.

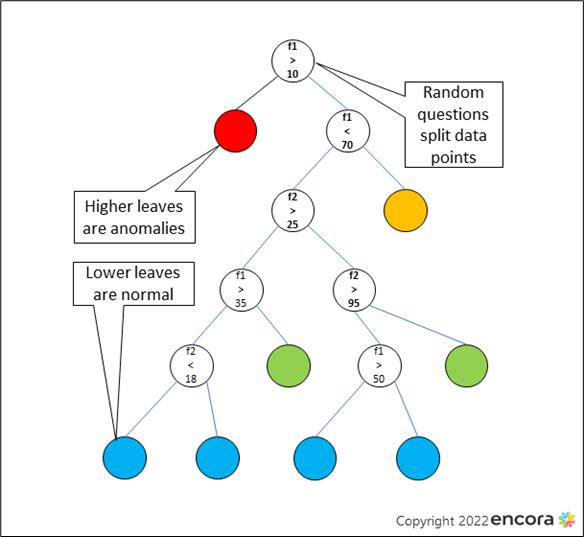

In order to understand it, it’s important to understand the motivation behind its predecessor, the Isolation Forest. Isolation Forests build trees that, instead of classifying entries in previously known groups, try to better separate entry points in a tree, spreading them on a binary structure in order to maximize the number of elements that can be separated. Then, it infers how unique elements are based on the depth of the tree in which the distinction was made.

Isolation Forests use the tree depth and random comparisons to create a tree

Isolation Forests use the tree depth and random comparisons to create a tree

Despite working with multiple variables, the Isolation Forests can have unreasonably long trees if a large number of irrelevant variables are considered, since these decisions will not be impactful on the final decisions, given they won’t isolate data meaningfully . It also suffers from anomaly collusion – if a group of similar anomalies exist in a given tree, the relevant decisions that separate this anomalous cluster from the rest might be distant from the actual separation of one anomaly from another.

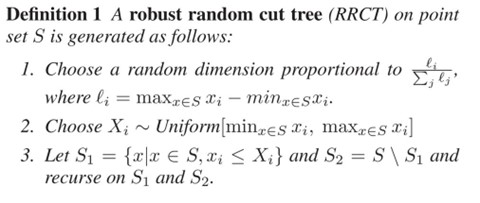

RRCF solves these issues with two key changes to the Isolation Forest algorithm: first, it takes into consideration how diverse each dimension or variable is among the remaining datapoints to be added to the tree, and selects the dimensions that would lead to a better separation of the remaining data. Then, it considers the anomaly level of a datapoint not by its depth, but by how much it (or, rather, its most divergent subtree) modifies the tree if it is added/removed - a measurement called displacement.

High level algorithm for tree creation: dimensions and comparisons are not chosen in a completely random fashion

The first change forces the algorithm to avoid features that are irrelevant for a given partition of the data – which can be either spurious dimensions (I.e., irrelevant for the problem in general) or dimensions that are not relevant for further separating this subproblem (or that are less relevant for this subgroup of datapoints). This approach minimizes the necessity of feature pruning or directed engineering of the problems, and allows for more exploratory models that consider anomalies in a broader range of values – even if a certain attribute isn’t consistently important, it might still be used in a subproblem of individual cut trees.

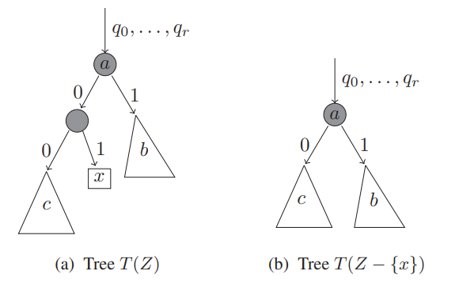

The second change further adds robustness to the algorithm by effectively ignoring the number of decisions taken so far (since these decisions are taken stochastically, as seen in the pseudocode above , their amount is not as relevant as its effect on the dataset) and, instead, considering only how much an isolation decision affects the remaining datapoints. Put in another way, this technique directly measures how many datapoints would be isolated ‘against’ the isolated target , putting it into perspective against the whole tree (and the entire population). This approach is further enhanced by also considering how each subtree is isolated from its sibling subtree, and the greatest isolation is used to describe a datapoint. This means that even if a datapoint is only isolated from another 3, these four datapoints could be isolated from another 300, representing a 100:1 isolation. This proportion is relevant no matter if it happened in the first layers of the three or in the last layers, as long as it is influencing many datapoints.

The displacement of x is proportional to the size of c in comparison to the total tree size

In summary, the RRCF algorithm measures how many datapoints are separated by a given decision in an isolation subtree, considering that each decision (and each subtree) was built using features that reliably divide data underneath it. Features that are less relevant are less likely to be used (and even if they are used, they are less likely to influence the results), and anomalous clusters will not collude the results (unless, of course, they are so large they are not anomalous anymore).

The RRCF, as a forest-based technique, also provides great support for horizontal scalability: each tree can be built independently from others, and their results can be combined after processing is done. The technique also supports variable tree depths, tree sizes and forests sizes. Thanks to sampling, the trees do not need to grow proportionally to the population if the subset used to build trees is representative of the general population . Finally, trees are among the most explainable Machine Learning techniques due to the intuitive notion of binary search trees : domain specialists are not required to understand Machine Learning in order to interpret the result of a series of binary questions.

Finally, RRCF provides an optimized algorithm for tree building that is computationally efficient and can be done continuously, even in online scenarios, thanks to a data structure that stores not only the decision being taken on each tree node but also the bounding box of the subtree and each of its branches, facilitating analysis and updates of whole segments without the need of traversing an d rebuilding the tree over and over.

Despite all the advantages, RRCF alone doesn’t solve all issues directly: it still requires data to be structured in a way that all necessary information to evaluate a data point is contained in it – it supports evolving models with data flow, but it doesn’t use temporal information directly. It also doesn’t provide explainability directly either – tree-based models are naturally more explainable, but without a clever strategy to use these structures, a forest can be cumbersome to explore, and conclusions might not be intuitive or easily explainable without further post-processing. In the next part we will explore some improvements that can be added to RRCF and their results.

References

- Robust Random Cut Forests. Sudipto Guha, Nina Mishra, Gourav Roy, Okke Schrijvers Proceedings of The 33rd International Conference on Machine Learning, PMLR 48:2712-2721, 2016.

- Forecasting at scale. Taylor SJ, Letham B. 2017. Forecasting at scale. PeerJ Preprints 5:e3190v2 https://doi.org/10.7287/peerj.preprints.3190v2

- Anomaly Detection: a Survey. Varun Chandola, Arindam Banerjee, and Vipin Kumar. 2009. Anomaly detection: A survey. ACM Comput. Surv. 41, 3, Article 15 (July 2009), 58 pages. https://doi.org/10.1145/1541880.1541882

Acknowledgement

This article was written by Ivan Caramello, Data Scientist and member of the Data Science & Engineering Tech Practices at Encora. Thanks to Daniel Franco, Alicia Tasseli, Caio Vinicius Dadauto , João Caleffi and Kathleen McCabe for reviews and insights.

About Encora

Fast-growing tech companies partner with Encora to outsource product development and drive growth. Contact us to learn more about our software engineering capabilities.